Key takeaways:

- Definition: Various terms like GAIO, AIO, GEO, and LLMO describe optimizing websites for language models and AI search engines to increase their discoverability. LLMO aims to help companies prominently place their brand, services, and products in the outputs of leading generative engines such as ChatGPT, Google Gemini, and AI Overviews (formerly Google SGE).

- Importance of Retrieval Augmented Generation (RAG): RAG is a framework for improving the quality of LLM-generated answers. The model relies on external knowledge sources to supplement the LLM’s internal representation of information. This way, a language model doesn’t need to be continuously retrained.

- Optimization for external knowledge sources: It’s more effective to improve the discoverability of your content in external knowledge sources used as references by language models, rather than trying to directly influence the models’ training data.

- Adaptation of SEO strategies: For effective OnPage-LLMO, content should be relevant and appropriately formatted so it stands out as a reliable answer in AI-enhanced search engines and language models. For OffPage-LLMO, a presence on database and aggregator websites and online PR are essential.

Generative experiences are coming – regardless of whether your digital brand is ready:

- Google SGE (AI Overviews) was rolled out in the USA after Google I/O on May 14, 2024

- AI Overviews were rolled out in Germany, Austria, and Switzerland on March 26, 2025

- More and more chatbots besides ChatGPT, such as Google Gemini, Microsoft Copilot, Grok, and Anthropic’s Claude, are emerging

- ChatGPT has been displaying links more prominently since March 29, 2024

So it’s high time you actively engage with optimization for language models and chatbots. In this guide, you’ll learn:

- Why optimization for language models is often misunderstood

- Why SEO isn’t dying but evolving and has even become more important

- Why Retrieval Augmented Generation is the future

- And which concrete steps you must implement to get more traffic via chatbots

Ready?


What is Large Language Model Optimization (LLMO)?

Typical Definition from the Internet

LLMO stands for Large Language Model Optimization. The term describes a series of measures to influence the answers of chatbots such as ChatGPT, Google Gemini, and Claude, or LLM-based generative experience systems such as Google SGE and Perplexity AI.

One thing you should know about this definition:

There’s currently no unified term for this practice. Common terms are:

- Large Language Model Optimization (LLMO),

- Generative Artificial Intelligence Optimization (GAIO)

- Artificial Intelligence Optimization (AIO)

- Answer Engine Optimization (AEO)

- and Generative Engine Optimization (GEO).

Each of these terms describes different approaches and scopes.

Our Definition

LLMO aims to help companies prominently place their brand, services, and products in the outputs of leading generative engines such as ChatGPT, Google Gemini, and Google AI Overviews.

Why Is the Definition Difficult?

While the training data of a language model can be influenced, this would have to happen on a large scale to see an effect. In my view, this will hardly be possible or profitable for companies. For this reason, I deliberately leave out this aspect.

The optimization leverage from my experience lies in Retrieval Augmented Generation…

Why Retrieval Augmented Generation (RAG)?

Large language models sometimes hit the nail on the head with certain questions; other times they output random information from their training data. When they occasionally sound like they have no idea what they’re saying, it’s because they actually don’t.

LLMs know how words are statistically related, but not what they mean.

What is Retrieval Augmented Generation (RAG)?

RAG is a framework for improving the quality of LLM-generated answers: The model relies on external knowledge sources to supplement the LLM’s internal representation of information.

Implementing RAG in an LLM-based question-answer system has two main advantages:

- The model has access to current, reliable facts

- Users can access the model’s sources. This allows its statements to be checked for accuracy – making them more trustworthy

RAG also reduces the need to continuously train the model with new data and update its parameters when something changes.

RAG Explained Simply

Appearing in the output of a static LLM could be valuable for your brand’s visibility but won’t drive traffic to your website.

Platforms like ChatGPT, which are connected to the internet, improve LLM outputs by retrieving current, relevant content from search engine indexes (the external knowledge source). This process is called RAG.

It’s the combination of the LLM’s performance with a selection of highly relevant content from the internet that enables the chatbot to produce a more current and factually accurate output.
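The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a toy illustration, not how ChatGPT or Gemini actually work: `INDEX`, `retrieve`, and `build_prompt` are hypothetical names, the "search index" is three hard-coded documents on example.com, and relevance is plain word overlap rather than a real search ranking. The call to a language model is left out; the sketch stops at the prompt that would be sent to it.

```python
import re

# Hypothetical mini "search index". In a real system this role is played
# by a search engine index such as Bing or Google.
INDEX = [
    {"url": "https://example.com/llmo",
     "text": "LLMO helps brands appear in the answers of chatbots such as ChatGPT."},
    {"url": "https://example.com/rag",
     "text": "Retrieval Augmented Generation supplements a language model with external sources."},
    {"url": "https://example.com/apples",
     "text": "Apples are a low-calorie snack."},
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, index, k=2):
    """Return up to k documents ranked by shared query terms (toy relevance score)."""
    query_terms = set(tokenize(query))
    scored = []
    for doc in index:
        overlap = len(query_terms & set(tokenize(doc["text"])))
        if overlap:  # ignore documents that share no term with the query
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query, sources):
    """Combine the retrieved passages and the question into one LLM prompt."""
    context = "\n".join(
        f"[{i}] {doc['text']} (source: {doc['url']})"
        for i, doc in enumerate(sources, start=1)
    )
    return (
        "Answer the question using only the sources below and cite them.\n\n"
        f"{context}\n\nQuestion: {query}"
    )


question = "What is Retrieval Augmented Generation?"
sources = retrieve(question, INDEX)
prompt = build_prompt(question, sources)
print(prompt)
```

The point for optimization is visible in `retrieve`: only content that the retrieval step finds and ranks can end up in the prompt, and therefore in the answer with its source link.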

What you should understand now:

Because of how they work, language models and the chatbots built on them always need a search function to deliver current and correct answers. That’s why ChatGPT also uses Bing search. SEO therefore doesn’t become less relevant but gains a new dimension.

An Example of RAG

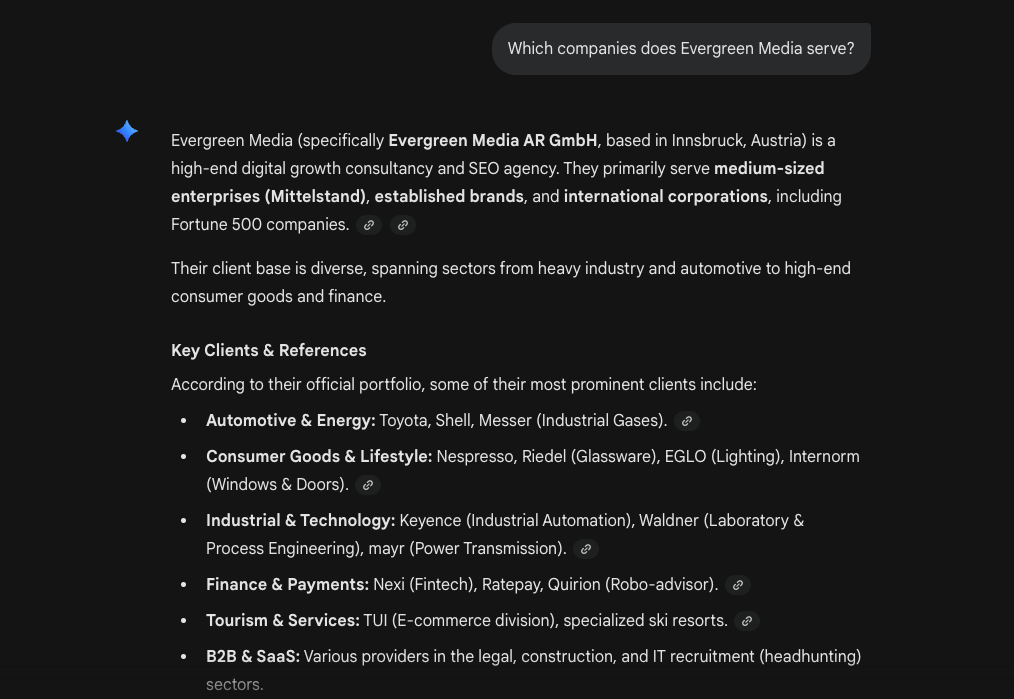

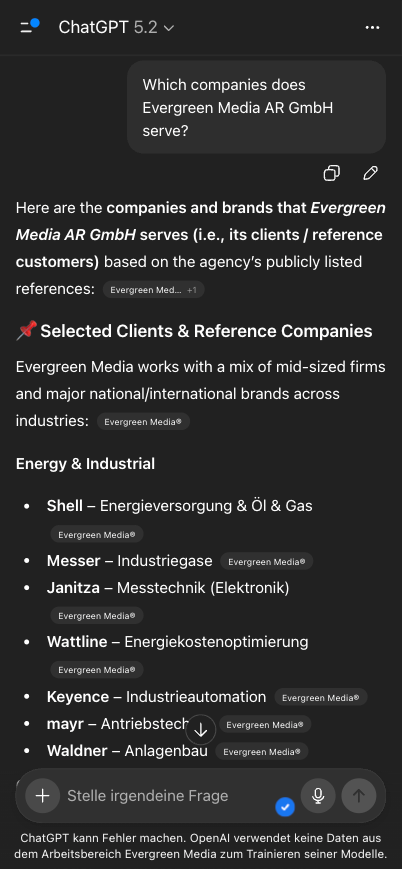

The following screenshots show how Retrieval Augmented Generation can look in practice:

This information is not part of GPT-4’s training data. ChatGPT instead searched for this information via Bing and then formulated it through the language model.

And another example from Google Gemini:

As mentioned at the beginning, ChatGPT has recently been displaying links even more clearly, which increases traffic potential.


Can We Optimize for Language Models with RAG?

Optimizing for RAG-LLMs is similar to optimizing for Featured Snippets, but with more variables and less predictability. Success depends on matching or surpassing the format and relevance of existing, high-performing content.

Principles of Optimization for Language Models

1. While it’s theoretically possible to influence LLMs’ training data, this would have to happen on an extremely large scale.

On one hand, this will hardly be possible for companies; on the other, the results are not controllable.

2. Language models aren’t continuously retrained from scratch but use RAG to deliver current information.

The leverage for companies isn’t manipulating an LLM’s training data but improving discoverability in the respective external knowledge source.

3. Optimization for language models and chatbots is about answers instead of URLs.

In SEO, we split pages by search intent and keywords – in GAIO, everything revolves around answers.

It’s possible that topic pages will regain importance. After all, with chatbots and AI-enhanced search engines, the smallest possible part isn’t the URL but the answer.

4. We optimize our discoverability in the respective external knowledge sources for RAG.

Specifically, this means:

- For ChatGPT, we optimize for Bing search

- For Gemini and AI Overviews, we optimize for Google search

Those who enjoy high discoverability in search engines will transfer this visibility to chatbots.

5. User inputs in chatbots are more complex and nuanced, so keyword research and answer coverage have to change.

In my opinion, classic keywords will serve only as pillars around which we build appropriate questions and answers.

We find the right questions through:

- “People Also Ask” (PAA)

- Follow-up questions from conversations with Google, Bing, ChatGPT, and Perplexity

- Customer surveys

- Sales team


OffPage-LLMO

Specifically, beyond your own website, it’s about:

- Database websites: e.g., Crunchbase, Sortlist, or FirmenABC

- Knowledge aggregators: large, moderated UGC websites like Reddit, LinkedIn, YouTube, and Wikipedia

- Large publishers: like The Verge, Financial Times, or Forbes

Our Goal:

- We gain visibility because our mention on these platforms is directly picked up through RAG. Example: We appear in a list of the best GAIO agencies in Germany

- We make it into LLMs’ training data through these platforms

The respective external website/platform must not have blocked the relevant bot if we want to use it for optimization.
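Whether a platform has blocked a given bot can be checked against its robots.txt. The following is a minimal sketch using Python's standard `urllib.robotparser`. The sample robots.txt, the example.com URL, and the helper name `allowed_bots` are made up for illustration. The user agent names (`GPTBot`, `bingbot`, `Google-Extended`) are crawler tokens published by the respective vendors, but which of them matters for which chatbot is an assumption you should verify yourself.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt of an external platform: it blocks GPTBot
# and allows every other crawler.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def allowed_bots(robots_txt, bots, url):
    """Return a dict mapping each bot name to whether it may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse the text directly, no network access
    return {bot: parser.can_fetch(bot, url) for bot in bots}


result = allowed_bots(
    ROBOTS_TXT,
    ["GPTBot", "bingbot", "Google-Extended"],
    "https://example.com/best-agencies",
)
for bot, allowed in result.items():
    print(f"{bot}: {'allowed' if allowed else 'blocked'}")
```

To check a live site instead of a sample string, `RobotFileParser` also offers `set_url()` and `read()`, which download the robots.txt before `can_fetch()` is called.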

What do you need to consider?

Here you’ll find a list of large websites and which bots they’ve blocked. And here’s another list with domains Google trusts.

| Platform type | What is this? | Examples | What to do? |
| --- | --- | --- | --- |
| Database websites | Classic aggregators with some kind of listings, e.g., business directories, review platforms, etc. | sortlist.de | Create a complete profile for your brand. Rise as high as possible in the platform’s ranking: e.g., appear high up on the “Best SEO Agencies in Innsbruck” page. |
| Knowledge aggregators | Platforms where you can publish content that’s moderated. | youtube.com, wikipedia.org | Actively participate as a brand on the platforms. Play each platform’s game well: e.g., build a popular YouTube channel. |
| Large publishers | Newspapers, magazines, etc. | theverge.com, forbes.com | Publish sponsored content to buy your place. Convince journalists to report on your brand with online PR campaigns. |

That’s why I’m also pushing the topic of digital PR so much. Link building is nice, but low-quality articles from private blog networks won’t help you here.

If you want to generate visibility with AI-enhanced search engines and language models, you must be mentioned again and again in large publishers. I explicitly say mentioned because it’s not about backlinks.

It’s about prominence for direct queries (ChatGPT via browsing, i.e., RAG) and enough mentions to possibly stand out in the training data.

OnPage-LLMO

Some will now recommend optimizing for individual answers. I doubt this is the most sensible long-term approach.

Let’s take the case of AI Overviews.

iPullRank writes about its experiments:

“The more saturated the topic or vague the query, the more volatility we’ll see in the results day to day.”

Garrett Sussman, iPullRank, February 6, 2024

This sounds neither like a sustainable strategy nor a good time investment. We want to optimize all our content according to LLMO best practices and thus increase the probability of appearing in chatbots.

Pointless micro-work remains pointless.

Your Framework

- It’s always about relevance and format. Rambling prose is the enemy

- We build questions and answers around our classic keywords

- Instead of giving users a 4,000-word article about apple calories, we give them the answer quickly and simply

Language

- Use simple, clear, and information-rich language (think about what works in Featured Snippets)

- Explain technical terms in structured form, short and snappy

Structure

- We want ranch-style content instead of skyscraper content

- Start long-form content with a brief summary of the most important points or key takeaways

- Start each chapter with a short answer

- Make sure to prepare content so it’s suitable for answers (depending on the language model):

– Length

– Formatting

– Information density

- Use known models like pros and cons, comparisons, etc., to deliver good answers

- Orient your website structure on how the LLM structures your topic

- Use structured data
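As one possible way to act on the last point, question-and-answer pairs can be marked up as schema.org `FAQPage` data in JSON-LD. The sketch below builds that markup with Python's standard `json` module. The helper name `faq_jsonld` and the sample question are illustrative assumptions, and the source does not claim that any particular chatbot or AI search engine evaluates this markup.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage structure from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Illustrative content only; replace with the questions and answers from your page.
data = faq_jsonld([
    (
        "What is LLMO?",
        "LLMO stands for Large Language Model Optimization.",
    ),
])

# The JSON string goes into a <script type="application/ld+json"> tag in the page's HTML.
print(json.dumps(data, indent=2, ensure_ascii=False))
```

The markup should mirror questions and answers that are also visible on the page, so it fits the framework of short answers built around classic keywords.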

Content

- Look at what’s currently being delivered as an answer and learn from it regarding format and relevance

- Provide unique information or data not found in existing training data or on other websites (Information Gain).

- Add quotes (e.g., from well-known experts or an institution)

- Supplement your content with statistics and quantitative data

- Add source references from authoritative sources

Preparation

- Collect User Generated Content (UGC) and present the insights in a quotable form, i.e., as a helpful answer
